ASCERTAIN Dataset Details and Download

Overview

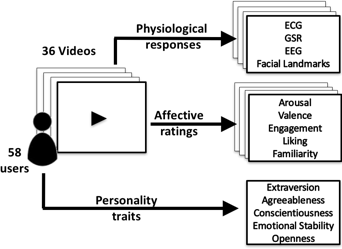

In this work we present ASCERTAIN, a multimodal databaASe for impliCit pERsonaliTy and Affect recognitIoN using commercial physiological sensors. To our knowledge, ASCERTAIN is the first database to connect personality traits and emotional states via physiological responses. ASCERTAIN contains Big Five personality scales and emotional self-ratings of 58 users, along with synchronously recorded Electroencephalogram (EEG), Electrocardiogram (ECG), Galvanic Skin Response (GSR) and facial activity data, recorded using off-the-shelf sensors while the users viewed affective movie clips. We first examine relationships between users' affective ratings and personality scales in the context of prior observations, and then study linear and non-linear physiological correlates of emotion and personality. Our analysis suggests that the emotion–personality relationship is better captured by non-linear than by linear statistics. Finally, we attempt binary emotion and personality trait recognition using physiological features. Experimental results cumulatively confirm that personality differences are better revealed when comparing user responses to emotionally homogeneous videos, and that above-chance recognition is achieved for both affective and personality dimensions.

Description

Recorded persons:

58 (37 male, 21 female), mean age = 30 years

Employed stimuli:

36 movie clips selected in a prior study (explained in the paper); mean length = 80 s, std = 20 s

Synchronized recorded data:

Physiological signals:

3-channel Electrocardiogram (ECG)

Galvanic Skin Response (GSR)

Electroencephalogram (EEG): single dry-electrode EEG sensor (NeuroSky)

Face Response:

Facial landmark trajectories (EMO)

Data evaluation:

Annotations for the quality of all data recorded from the four modalities (ECG, EEG, GSR, EMO).

User data:

Personality scores for the Big Five personality traits: Extraversion, Agreeableness, Conscientiousness, Emotional Stability, Openness

Additionally, ratings of 50 descriptive adjectives for each subject, from which the personality trait scores are calculated
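The trait scores are derived from the 50 adjective ratings, but the scoring key is not given here. As a hedged illustration only, assume a typical marker-scale layout: each trait is the mean of 10 adjectives, some reverse-keyed, rated on a 1 to 7 scale. The item-to-trait assignment (`HYPOTHETICAL_KEY`), the choice of reverse-keyed items and the scale range are all placeholders, not the ASCERTAIN key.

```python
from statistics import mean

TRAITS = ["Extraversion", "Agreeableness", "Conscientiousness",
          "Emotional Stability", "Openness"]
SCALE_MIN, SCALE_MAX = 1, 7  # ASSUMED rating range, not taken from the dataset docs

# HYPOTHETICAL key: adjective indices 0..49 are assigned round-robin to the
# five traits (10 items each); every other item of a trait is reverse-keyed.
HYPOTHETICAL_KEY = {
    trait: [(i, (i // 5) % 2 == 1) for i in range(t, 50, 5)]
    for t, trait in enumerate(TRAITS)
}


def score_big_five(ratings, key=HYPOTHETICAL_KEY):
    """Return the mean (reverse-corrected) adjective rating per trait for one subject."""
    if len(ratings) != 50:
        raise ValueError("expected 50 adjective ratings")
    scores = {}
    for trait, items in key.items():
        values = [
            # Reverse-keyed items are mirrored around the scale midpoint.
            SCALE_MIN + SCALE_MAX - ratings[i] if reverse else ratings[i]
            for i, reverse in items
        ]
        scores[trait] = mean(values)
    return scores


# A subject who answers the midpoint everywhere scores the midpoint on every trait.
print(score_big_five([4] * 50))
```

Swapping in the real key only requires replacing `HYPOTHETICAL_KEY` with the mapping from the dataset documentation.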

Self-reports (36 videos for each of the 58 subjects):

Arousal, Valence, Engagement, Liking, Familiarity
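With 36 clips rated by each of 58 subjects on five scales, the self-reports fit naturally into a 58 x 36 x 5 nested array. The abstract mentions binary emotion recognition, but this page does not say how the binary labels are obtained. The sketch below assumes a per-subject median split of one rating dimension, a common choice that is not necessarily the one used in the paper; `DIMENSIONS` and `binary_labels` are hypothetical names.

```python
from statistics import median

# Full data (assumed layout): ratings[subject][clip][dimension],
# i.e. 58 subjects x 36 clips x 5 dimensions.
DIMENSIONS = ["Arousal", "Valence", "Engagement", "Liking", "Familiarity"]


def binary_labels(ratings, dimension):
    """Median-split one rating dimension per subject (ASSUMED labelling scheme).

    Returns labels[s][c] = 1 if subject s rated clip c above that
    subject's own median on `dimension`, else 0.
    """
    d = DIMENSIONS.index(dimension)
    labels = []
    for subject in ratings:
        values = [clip[d] for clip in subject]
        threshold = median(values)
        labels.append([1 if v > threshold else 0 for v in values])
    return labels


# Toy example: one subject, four clips, only Valence varies.
toy = [[[0, v, 0, 0, 0] for v in (1, 3, 5, 7)]]
print(binary_labels(toy, "Valence"))  # [[0, 0, 1, 1]]
```

Splitting per subject rather than over the pooled ratings compensates for individual differences in how people use the rating scale.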

To get access to the documentation of the data, please click here!

Please Read Me!

Please consider the following points before downloading the shared files:

- The data is shared for research purposes only.

- Commercial use of the data is not permitted.

- If you use any part of the shared data in a publication or report, please cite the ASCERTAIN dataset paper.

Thanks for your consideration!

Access Request

We use Google Drive to share the extracted features and the raw data. To be granted access to the extracted features and/or the raw data, you need a Google ID associated with your identity. Please check the End User License Agreement (EULA) for details. The EULA should be downloaded, printed, signed, scanned and returned via email to ascertain.mhug [at] gmail [dot] com with the subject line "ASCERTAIN access request". In your email, please state your position and your institution. Please submit your request from your institutional email address (i.e., not a Microsoft, Yahoo, etc. account, unless of course you work at Microsoft, Yahoo, etc.).

Shared Data

The extracted features described in the paper and used for the experimental analysis are shared here, as is the raw data for each clip and each modality. We used MATLAB for signal analysis; the shared files are in ".mat" format and can be read with MATLAB or GNU Octave.
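For readers working in Python rather than MATLAB/Octave, SciPy's `scipy.io.loadmat` reads MAT-files up to version 7.2; files saved in the HDF5-based v7.3 format need a library such as h5py instead. The sketch below assumes the shared files are in a format `loadmat` supports. Since the variable names stored in the files are not documented on this page, the hypothetical helper `list_mat_variables` simply lists them with their shapes; the file name in the usage line is a placeholder.

```python
import scipy.io


def list_mat_variables(path):
    """Load a MAT-file and return {variable name: array shape}, skipping metadata keys."""
    contents = scipy.io.loadmat(path)
    # loadmat adds '__header__', '__version__' and '__globals__' entries;
    # everything else is a user variable stored as a NumPy array.
    return {name: value.shape for name, value in contents.items()
            if not name.startswith("__")}


# Placeholder path: substitute a file from the shared download.
# print(list_mat_variables("example_features.mat"))
```

Once the variable names are known, `scipy.io.loadmat(path)[name]` returns the corresponding array directly.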


Here you can download the Extracted Features and the Raw Data.

Contact Information

If you have any further questions about the dataset, please contact:

ascertain.mhug [at] gmail [dot] com